Since our infrastructure is powered by OpenStack, Cinder takes care of exposing block devices to our virtual machines. Because we value open-source software, we use Ceph as the storage backend (and LVM in certain setups).

In today’s article, I will give an overview of the Ceph architecture, point out its advantages and disadvantages, and walk through a demo of Ceph snapshots that shows its power and ease of administration.

Ceph Architecture

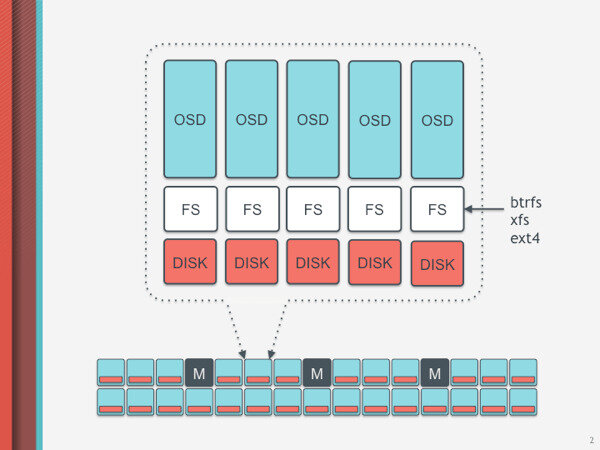

Ceph is a free software-defined storage (SDS) platform that implements object storage on a cluster. It is designed to have no single point of failure and to be fault-tolerant (thanks to RADOS data replication) while running on commodity hardware, so it does not depend on specific vendors or require specialized hardware. It is also self-healing and self-managing: dedicated cluster monitor daemons (ceph-mon) keep track of which cluster nodes are active and which have failed.
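The monitors themselves are replicated too: they agree on the cluster state using Paxos, which only works while a strict majority (a quorum) of them is up. The article does not say how many monitors we run, so the following is only a general illustration; `quorum_size` and `tolerated_failures` are hypothetical helper names. It shows why monitor counts are usually odd.

```shell
#!/bin/sh
# Illustration only: Paxos-based monitors need a strict majority to stay available.

# quorum_size N: smallest number of monitors that forms a majority of N.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# tolerated_failures N: how many monitors can fail while a quorum still exists.
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 4 5; do
    echo "$n monitors: quorum of $(quorum_size "$n"), tolerates $(tolerated_failures "$n") failure(s)"
done
```

Going from 3 to 4 monitors raises the quorum from 2 to 3 but still tolerates only one failure, which is why an odd number of monitors is the usual choice.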

The data itself is stored by the ceph-osd daemons (object storage devices, OSDs), which in our setup run on btrfs to take advantage of its built-in copy-on-write capabilities. By default, Ceph keeps three copies of every object (the original plus two replicas), so every 1 TB of data needs 3 TB of raw capacity. Ceph also recommends keeping the OSD data, the OSD journal, and the operating system on separate disks. Using Ceph efficiently therefore requires generous hardware resources.
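To make the replication overhead concrete, here is a small shell sketch of the capacity arithmetic. The helper names `raw_capacity_tb` and `usable_capacity_tb` are hypothetical, and the sketch assumes a replicated pool whose size (number of copies) is 3 unless another value is passed. It ignores free-space headroom and journal or filesystem overhead, so real clusters need more than this. The actual size of an existing pool can be checked with `ceph osd pool get <pool> size`.

```shell
#!/bin/sh
# raw_capacity_tb DATA_TB [SIZE]
# Hypothetical helper: raw disk (TB) needed to hold DATA_TB of data
# when the pool keeps SIZE copies of every object (assumed default: 3).
raw_capacity_tb() {
    data_tb=$1
    size=${2:-3}
    echo $(( data_tb * size ))
}

# usable_capacity_tb RAW_TB [SIZE]
# Hypothetical helper: data (TB, rounded down) that fits into RAW_TB of raw disk.
usable_capacity_tb() {
    raw_tb=$1
    size=${2:-3}
    echo $(( raw_tb / size ))
}

raw_capacity_tb 1         # 3 copies: 3
raw_capacity_tb 10 2      # 2 copies: 20
usable_capacity_tb 30     # 30 TB raw at 3 copies: 10
```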

Ceph also offers snapshots: read-only copies of an image at a certain point in time. What is more, Ceph supports snapshot layering. Because a snapshot is read-only, it can serve as a stable parent for copy-on-write clones, so new images can be created from it quickly and easily. Here is a short demo.

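```shell
# Optional, for a fresh test: create a 1 GiB image foo in pool rbd (size is in MB).
rbd create --size 1024 rbd/foo

# Create a snapshot named snappy of image foo in pool rbd.
rbd snap create rbd/foo@snappy

# Protect the snapshot against deletion; this is required before cloning it.
rbd snap protect rbd/foo@snappy

# Clone the snapshot into a new copy-on-write image bar. This is fast because no data is copied yet.
rbd clone rbd/foo@snappy rbd/bar

# A snapshot that still has clones cannot be unprotected, so detach bar first
# by copying the parent data into it (alternatively, delete it with "rbd rm rbd/bar").
rbd flatten rbd/bar

# Unprotect the snapshot so it can be deleted.
rbd snap unprotect rbd/foo@snappy

# Finally, delete the snapshot to clean up.
rbd snap rm rbd/foo@snappy
```

The original author: Michal H., Junior DevOps Engineer, cloudinfrastack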